Artificial Intelligence must be a supplement, not a replacement for human knowledge

Get the Point!

As a lifelong lover of history, literature, and politics, and as one blessed with the ability to quickly recall events from my past or knowledge I've acquired through years of reading, I begin today's column by noting that Artificial Intelligence (AI) surely has a valuable place in the world of “nerdom.” From the advent of research tools like Google and Westlaw/LexisNexis to today's ChatGPT, these modern technological advances are truly magnificent for those who love gaining knowledge in every field of human intellectual endeavor.

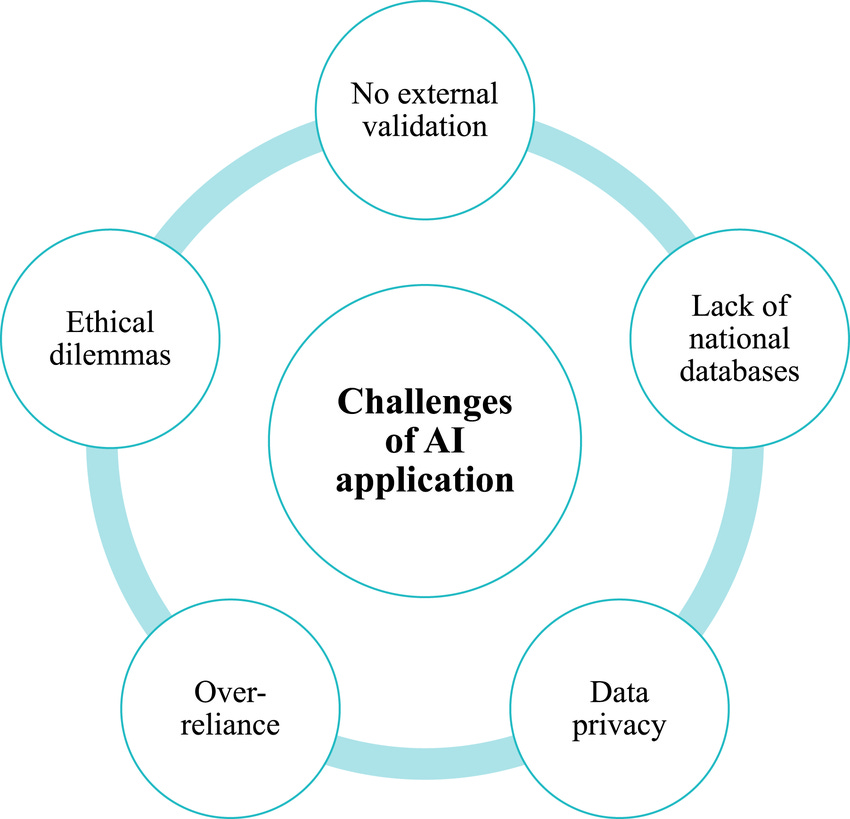

And yet, like many academic, industrial, and political leaders, I harbor serious concerns about the potential abuses of AI, including an overreliance that not only yields occasional, frustrating inaccuracies but is also fraught with the danger of replacing the age-old human element of discerning right from wrong!

Last night, while scrolling through my Facebook feed, I stumbled across an AI-generated reel about "notable Black celebrities who died in 2024." The short video montage marked the passings of screen legend James Earl Jones and music icon Quincy Jones, both of whom died last year. But then the montage went off the rails, incorrectly mourning the 2024 passings of actress Nichelle Nichols, who died in 2022; actor Michael Williams, who died in 2021; the legendary Cicely Tyson, who also died in 2021; and the equally legendary Ruby Dee, who departed this life way back in 2014!

The late Michael Williams, depicted in his iconic role as Omar Little on HBO’s critically acclaimed series “The Wire,” died in 2021—not 2024!

Now, some may call me a pedant and dismiss these assertions as the waxings of an aging man who is slowly becoming a grumpy "get off my lawn" curmudgeon. But I assure you it's deeper than that. My fear is that anyone who watches that reel about famous celebrity deaths and doesn't know any better may accept what was shown and said as the gospel truth! And by so doing, that viewer may repeat the information in a discussion, a debate, or, Heaven forbid, an academic paper or quiz bowl competition and be DEAD wrong!

The same holds true for AI-generated white papers, factoids, and legal briefs that are presented without the presenter taking time to cross-check them for accuracy. On this point, I remember that when I was an adjunct professor teaching political science and introduction to criminal law courses at Florida A&M University from 2000 to 2010, the expansion of the Internet as a research tool occasionally led students to submit research papers and critiques as original scholarship when, to my dissatisfaction and to the detriment of their grades, they had copied and pasted the same information that several of their classmates had found through the same Internet searches.

Today, some of my friends who teach at the postsecondary and professional school levels say that this form of plagiarism is only getting worse. That is a frightening reality when one considers that future generations of professionals may never develop the critical thinking skills that come from reading multiple sources for themselves in order to understand, compare, and contrast the issues germane to their fields!

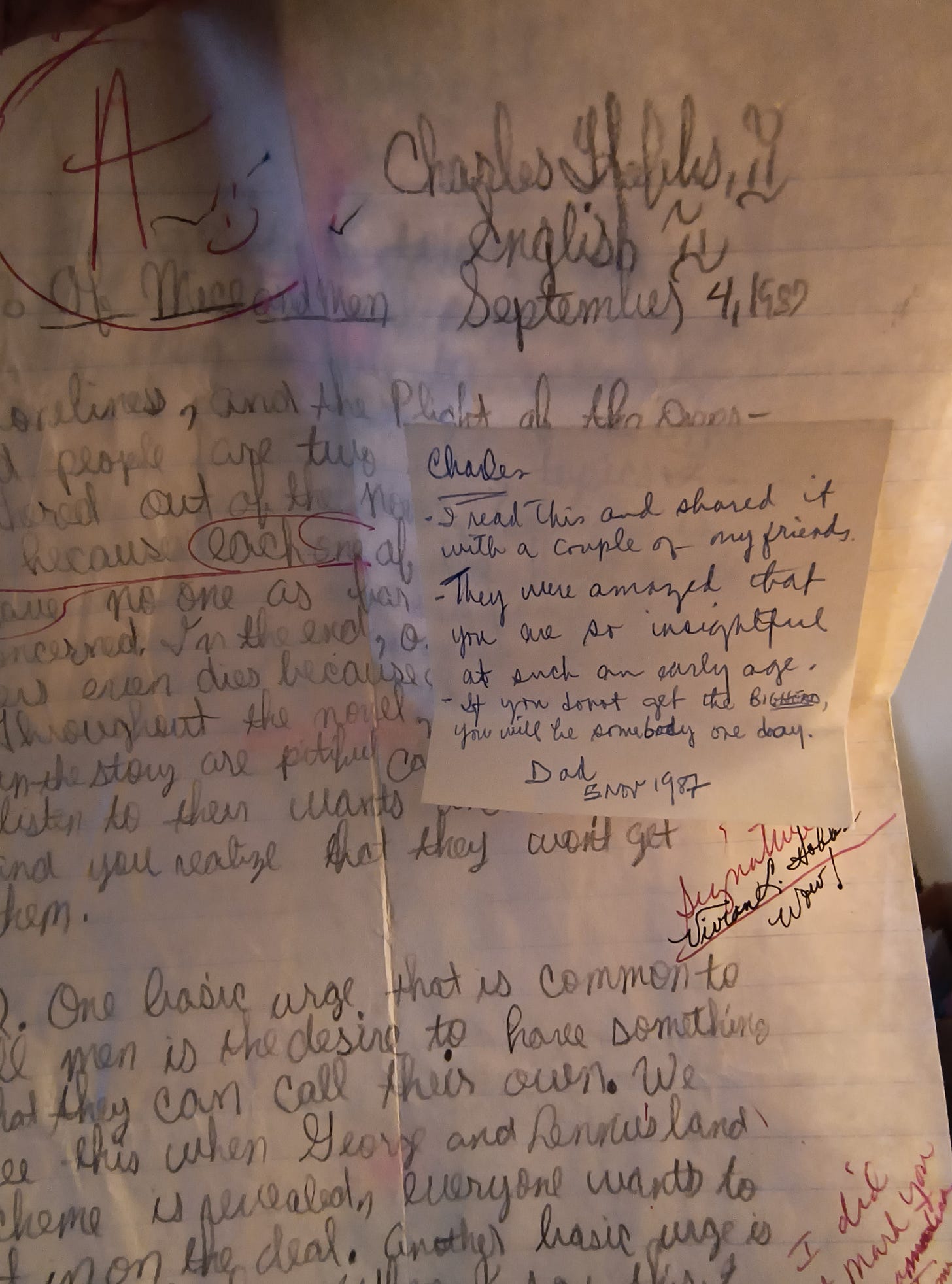

Photo of an “A” paper that I wrote in class about John Steinbeck's classic “Of Mice and Men” back in 1987. Perhaps it is time for teachers to go back to forcing students to write in class—by hand—to avoid the AI plagiarism bug?

Now, the days when students had to ride their bicycles to the local libraries in Tallahassee, as I did as a kid, or walk through horrific heat or bone-chilling winds in Atlanta to research in the special collections section of the Woodruff Library that served the AUC (or catch MARTA to conduct research over at the Georgia State or Georgia Tech libraries), as I did as a young adult, are long gone.

But what's not gone, and what's more necessary than ever, is the intellectual acuity to separate fact from fiction, and to do so more quickly and accurately than any computer program that presumes to decide what is "truth."

Another concern I have with overreliance on AI is that machines can inherit the biases of their creators. In other words, if a programmer takes the historical viewpoint that European colonial conquests in Africa, Asia, the Middle East, and the Western Hemisphere were "ordained by the Judeo-Christian God" and justifiable no matter the miseries, murders, and mayhem inflicted upon local populations, then the reader of such conclusions must have enough background knowledge to counter that those local populations saw European conquest in a very different light!

Indeed, to this very day, wars and rumors of wars in the aforementioned regions are still largely the result of global white supremacy, cloaked in the Cross of Jesus, that has been exported for over six centuries! Sadly, I fear that such biases in the information fed to these systems will expand, not contract, discrimination in medical insurance and health care, bank lending, and hiring. And as the AI revolution increasingly infiltrates the legal profession, those biases will further unbalance the scales of justice, all because too many humans rely on their computers to do ALL of the intellectual work!

Separately, the over-automation of information also poses the significant risk of making an increasingly less empathetic society even more indifferent to the conditions and feelings of those who look different, pray differently, and love in ways that are contrary to our own customs and mores. This reminds me of the chilling conclusions of Lt. Colonel Douglas M. Kelley, the chief American psychiatrist who examined the Nazi leaders arrested and tried for war crimes at Nuremberg following World War II. Kelley wrote,

“In my work with the defendants, I was searching for the nature of evil and I now think I have come close to defining it. A lack of empathy. It’s the one characteristic that connects all the defendants, a genuine incapacity to feel with their fellow men. Evil, I think, is the absence of empathy.”

Leaders of the Nazi High Command, including Air Force leader Hermann Göring and Army leader Alfred Jodl, listen to testimony during their trial in 1946.

Indeed, to avoid the banality of evil, we need to develop thinkers who see AI as a supplement to, not a replacement for, careful research, assiduous study, and fervent prayer and meditation to better understand our past and our collective present, all in furtherance of shaping a better future.
